Abstract

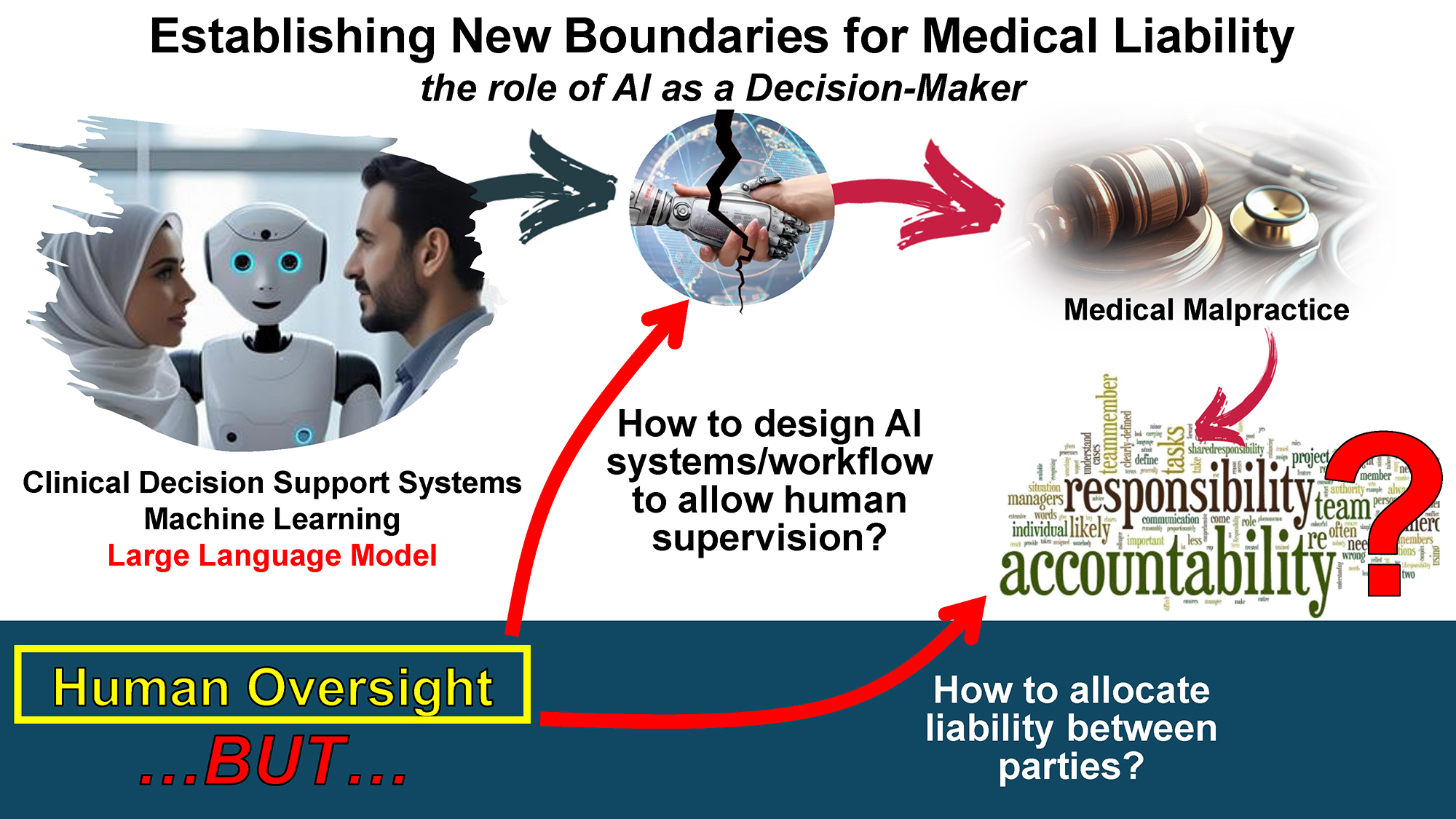

The introduction of artificial intelligence (AI) in healthcare has created novel challenges for the field of medical malpractice. As healthcare professionals increasingly rely on AI in their decision-making, traditional medicolegal assessments may struggle to adapt. It is essential to examine AI’s role in clinical care, in both its current applications and its likely future developments, to clarify accountability for diagnostic and therapeutic errors. Clinical decision support systems (CDSSs) in particular, unlike traditional medical technologies, act as co-decision-makers alongside physicians. They process patient information, medical knowledge, learned patterns, and other inputs to generate a decision output (e.g., a suggested diagnosis), which the physician must then evaluate. Under the AI Act, CDSSs cannot operate fully autonomously; instead, physicians are to be assigned an oversight role. It is questionable, however, whether it would always be appropriate to assign full responsibility, and consequently liability, to the physician. This is especially true where oversight is limited to reviewing CDSS outputs in a manner that leaves no real control in the physician’s hands. Future research should aim to define clear frameworks for allocating liability and to design workflows that ensure effective oversight, thereby preventing unfair liability burdens.

Key words: medical malpractice, liability, legal medicine, lawsuit, artificial intelligence

Introduction

Medical malpractice has long been recognized as a problem, and claims continue to proliferate across countries, imposing considerable costs and burdens on healthcare systems and personnel.1 Medical liability is typically a fault-based system: in the event of harm, a healthcare professional may be held liable if their actions are found to deviate from the accepted standard of care. The central question is therefore whether the provider’s actions conformed to that standard. In such cases, courts generally rely on experts who, depending on the national system, may be medical professionals from the relevant specialty, medicolegal experts, or both. These experts advise the court, often through written opinions or courtroom testimony, on whether the healthcare provider’s actions aligned with or fell short of established medical norms and guidelines.

Indeed, legal medicine, as an expert branch of contemporary medicine, is dedicated to assessing cases of alleged professional liability among healthcare providers according to codified and standardized methodologies.2 This is reflected in the evolution of several subdisciplines of legal medicine that address specific concerns and continually strive to adapt to the changing context in which they operate.3, 4 Nevertheless, this progress remains uneven, as evidenced by the absence of dedicated specialties that rigorously address urgent contemporary issues, such as the medicolegal implications of deploying artificial intelligence (AI), which have so far received scant attention.3 This gap is likely to grow in importance. Historically, healthcare professionals have relied on guideline recommendations and up-to-date literature databases, motivated by the desire to comply with the “gold standard” of evidence-based medicine, which ensures a higher level of healthcare quality and, consequently, better health outcomes for patients.5 Physicians are further incentivized to follow such guidelines because doing so may lower their risk of becoming involved in medicolegal disputes. In the future, they are therefore likely to rely increasingly on AI-based decision support, potentially to distill the vast body of evidence available on various topics in applied medicine, including guidelines from scientific societies.6 However, this evolution adds a new dimension to healthcare professionals’ responsibility, one they may not be adequately equipped to recognize and address. The integration of AI into routine clinical practice is proving profoundly transformative, raising a multitude of complex and nuanced questions.

AI-based diagnostic tools offer numerous benefits for medical practice, including the potential to achieve a uniform standard of healthcare services7 and to monitor the activities of less experienced physicians.8 At the same time, they raise ethical and legal issues concerning, e.g., the possible misuse of patient data.9 In particular, when a physician commits a diagnostic (or, in the future, therapeutic) error based on recommendations provided by AI, the determination of legal liability becomes a critical question. Once the error has been identified and its causal link to quantified harm established, the question arises of who bears legal responsibility.2 The medicolegal assessment, grounded in established evaluation methodologies and criteria, traditionally focuses on the decision-making processes of the healthcare professional. A pertinent question therefore emerges: would reliance on AI decisions that turn out to be erroneous and harm the patient lead to liability for the healthcare provider?10 This issue is highly contentious, and the scholarly debate on it is both extensive and varied.

As AI permeates more aspects of life, concerns about its implementation in work processes temper the general enthusiasm. These concerns can be summed up in a simple, unsettling, and widely asked question: “What will be the role of AI?” In healthcare, AI-powered clinical decision support systems (CDSSs) are meant to assist physicians.11 The decisions that CDSSs can support vary widely, but among the most significant are those physicians make in clinical care during single-patient consultations, such as which diagnosis to give the patient and which treatment to prescribe.12 Although current CDSS uptake is low, recent developments in AI methodologies are likely to increase implementation. The first CDSSs were based on knowledge modeling (e.g., MYCIN, developed in the 1970s, was a rule-based system that aided antibiotic selection),13 but newer machine learning (ML) applications, including systems based on large language models (LLMs), appear to hold greater potential for adoption.14, 15 As CDSSs become increasingly available and relied upon for clinical decision-making, the boundaries of the physician’s role, and consequently the limits of their liability, become increasingly blurred.

Differences from other non-AI medical technologies

At first glance, one might believe that CDSSs merely provide information, not unlike previously existing medical technologies, and that the physician remains the ultimate decision-maker and, consequently, the party held liable. CDSSs, however, are not fully comparable to other medical technologies. X-rays and ECGs, for example, provide only medical data on the patient; in this sense, they merely “capture” the patient’s state. The physician then interprets this information entirely on their own, and it informs decision-making alongside a variety of other information sources. CDSSs go a step further: they do not capture patient information, they process it. More specifically, CDSSs combine medical information about the patient with medical knowledge and provide the physician with a recommendation that could potentially be followed without any further reasoning.16 Machine learning CDSSs (ML CDSSs) may even follow a logic that lies beyond existing medical knowledge; for example, an ML CDSS could diagnose breast cancer with high accuracy while following an inferred logic that physicians cannot fully understand.17, 18 For all these reasons, CDSSs are not just another medical technology. They should be regarded as decision-makers that work alongside physicians and provide them with fully elaborated and potentially definitive recommendations for diagnosis and/or treatment.10 When physicians use a CDSS, clinical decisions emerge from a collaboration between the physician and the AI system. It would therefore seem unreasonable to place the entire liability burden on the physician when they are not the only entity responsible for the decision.

CDSSs can also be categorized as either white-box or black-box AI, the difference lying in their degree of interpretability. White box (or transparent) systems are designed so that the logic followed in producing an output can be understood. They are typically rule-based systems, meaning that they work by combining a symbolic representation of knowledge with an inference engine.19, 20 In contrast, black box models predominantly employ machine learning methodologies, with a particular emphasis on deep learning. Deep learning is based on training artificial neural networks and requires large quantities of data to be processed through multiple layers, most of which are “hidden”.19, 21 In black box systems, even technical experts are unable to fully comprehend the reasoning behind the AI’s specific output.22, 23
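
To make the contrast concrete, the following minimal Python sketch illustrates, under stated assumptions, why rule-based systems are considered white box: a small forward-chaining engine applies if-then rules from a symbolic knowledge base and records every rule it fires, so the path from input findings to output can be read back step by step. The rules, finding names, and conclusions are hypothetical and purely illustrative; they have no clinical validity and are not taken from MYCIN or any real CDSS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    conditions: frozenset  # findings that must all be present for the rule to fire
    conclusion: str        # finding added to the known facts when the rule fires


# Hypothetical, illustrative knowledge base (NOT clinically validated).
RULES = [
    Rule("R1", frozenset({"fever", "tachycardia"}), "sirs_signs"),
    Rule("R2", frozenset({"sirs_signs", "suspected_infection"}), "possible_sepsis"),
    Rule("R3", frozenset({"possible_sepsis", "hypotension"}), "escalate_care"),
]


def forward_chain(facts, rules):
    """Fire rules until no new finding can be derived; return findings and a readable trace."""
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            # A rule fires when all its premises are known and its conclusion is new.
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                trace.append(f"{rule.name}: {sorted(rule.conditions)} -> {rule.conclusion}")
                changed = True
    return known, trace


if __name__ == "__main__":
    patient = {"fever", "tachycardia", "suspected_infection", "hypotension"}
    conclusions, trace = forward_chain(patient, RULES)
    for step in trace:
        print(step)
```

Every conclusion in the printed trace can be traced back to a named rule and its premises. A deep learning model offers no comparable trace, and this is the gap that explainability techniques attempt to fill.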

In terms of their relationship with the physician, white box systems do not differ significantly from traditional information tools such as risk score charts and flowcharts. Black box systems, on the other hand, are a novelty in this respect, since they act as “opaque” advisors to physicians. A lack of understanding of the machine’s processes can make it difficult to assess the meaning and reliability of its output, potentially lowering trust.24 To overcome these issues, a growing line of research focuses on explainable AI (XAI).22 Interpretability refers to a model’s built-in transparency, whereas explainability involves post hoc techniques that translate a model’s outputs into human-understandable explanations.20, 25 The explanation produced by such an explanatory algorithm, however, is by definition only an approximation of how the black box system actually arrives at its prediction, so it is bound to be imperfect and may at times even be misleading.20
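
The approximate nature of post hoc explanations can be illustrated with a global surrogate, one common family of explanation techniques (other families, such as local or feature-attribution methods, work differently). The minimal Python sketch below assumes a hypothetical black box, a non-linear rule over two synthetic features in [0, 1]. It fits the single-threshold rule that best mimics the black box’s outputs and measures fidelity, i.e., the share of cases on which the explanation agrees with the model. Because the hypothetical black box depends on both features jointly, no single-threshold rule can match it everywhere, so fidelity stays below 1.

```python
import itertools


def black_box(x1, x2):
    """Hypothetical opaque model: a non-linear rule combining both features."""
    return 1 if (x1 * x2 > 0.25) or (x1 > 0.9) else 0


# Synthetic cases on an 11 x 11 grid over [0, 1] x [0, 1].
GRID = [i / 10 for i in range(11)]
cases = list(itertools.product(GRID, GRID))
labels = [black_box(a, b) for a, b in cases]


def fit_stump(cases, labels, thresholds):
    """Post hoc global surrogate: the rule 'feature > t -> 1' that best mimics the labels."""
    best = None  # (feature index, threshold, fidelity)
    for feature in (0, 1):
        for t in thresholds:
            preds = [1 if case[feature] > t else 0 for case in cases]
            # Fidelity = fraction of cases where the surrogate agrees with the black box.
            fidelity = sum(p == y for p, y in zip(preds, labels)) / len(labels)
            if best is None or fidelity > best[2]:
                best = (feature, t, fidelity)
    return best


feature, threshold, fidelity = fit_stump(cases, labels, GRID)
print(f"Surrogate explanation: flag if x{feature + 1} > {threshold} (fidelity {fidelity:.2f})")
```

The printed rule is easy to read, but on the cases where it disagrees with the black box it describes reasoning the model did not follow, and a clinician relying on it would be misled precisely there.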

Human oversight

It is also important to consider that, in this instance, liability rules must be read alongside market safety regulations. Liability norms function as ex post governance mechanisms, meaning that they intervene only after harm has occurred. Market safety regulations, by contrast, follow an ex ante approach, aiming to prevent harm by ensuring that products entering the market comply with specific safety standards.26 Safety regulations reduce the risk that a product will cause harm, while liability norms ensure that, if harm does occur, those affected are compensated. In the case at hand, the AI Act (Regulation (EU) 2024/1689),27 a safety regulation, does not directly regulate liability but indirectly shapes its allocation by imposing stringent safety obligations. Art. 14 of the AI Act imposes a particular requirement on high-risk systems, including medical devices: human oversight.28, 29 The article does not provide detailed guidance on how this oversight should be exercised in practice to ensure its effectiveness. What is clear, though, is the EU’s overall position of denying full automation to systems that are “too risky” and instead requiring humans to oversee AI’s activities. The article thus reinforces the role of the physician as a supervisor, which can have repercussions from a liability standpoint.30 A more comprehensive legal picture also requires considering how data protection laws such as the General Data Protection Regulation (GDPR)31 and health privacy regulations such as the Health Insurance Portability and Accountability Act (HIPAA)32 intersect with AI liability. These frameworks regulate the lawful processing and protection of sensitive personal and health data, which are critical to AI deployment and oversight. Their intersection with AI liability shapes compliance requirements and the scope of responsibility, particularly regarding transparency, data governance, and the protection of individual rights.

The human oversight requirement is particularly relevant in shaping how responsibility is distributed between AI systems and human operators. The degree of automation plays a crucial role in the distribution of task responsibility: The higher the level of human involvement, the greater their share of responsibility, and vice versa.33 For example, if a diagnosis is formulated by a physician using a diagnostic decision support system and the process involves low levels of automation, the physician is more directly involved and would therefore likely bear greater responsibility for any errors.34 In this sense, the oversight requirement not only mandates human participation but also implicitly assigns a degree of responsibility to the human operator. An increase in task responsibility generally increases the likelihood of liability being attributed to an individual as well, particularly where a failure arises within their domain of responsibility (though, of course, liability remains contingent upon the satisfaction of the relevant legal requirements).33 It is crucial, in this context, to evaluate how oversight was concretely exercised. If the conditions necessary for meaningful oversight are lacking, it can be questioned whether the responsibility (and potential liability) assigned to the physician is proportionate to their actual contribution in the decision-making process.

Imagine a scenario in which a clinic introduces a new CDSS, such as one used for early sepsis detection or oncology treatment planning, that, according to research, outperforms humans and is particularly time-efficient. Given these characteristics, the clinic assigns physicians the role of mere “controllers” who only review the AI’s outputs: instead of carrying out full clinical assessments themselves, physicians are expected to validate or flag the system’s suggestions. The clinic also decides that, since review tasks are less time-consuming, physicians must see more patients than before, significantly increasing their workload. As a result, the time available to physicians for evaluating each output is sharply reduced, and they are forced to make decisions very quickly. In such a situation, most physicians would likely end up simply deferring to the AI (so-called automation bias).35, 36 The control task would be reduced to blindly accepting the AI’s suggestions without any genuine critical thinking. Black box AI would aggravate this problem further, since physicians find it difficult to understand and contextualize the reasoning behind its decisions.37

The principle of oversight is intended to mitigate the risks associated with automation by balancing the advantages and disadvantages of using AI, so as to prevent adverse outcomes.38 In healthcare, for example, human oversight can help preserve the shared decision-making process between patient and physician.39 It helps ensure that final decisions align with patients’ preferences and values, elements that AI systems often overlook.40, 41 Integrating AI capabilities with physicians’ expertise, experience, and emotional intelligence can be highly beneficial.42, 43 Moreover, human oversight is vital for identifying and mitigating AI errors and for preserving physician autonomy in clinical decision-making.43 Overall, human oversight is essential to ensuring that AI is used safely and effectively in healthcare. If measures for proper oversight are not adopted, however, human oversight risks becoming a hollow requirement that primarily serves to identify someone to hold accountable. In this sense, Elish and Hwang’s concern that humans may become a “liability sponge” for the mistakes of machines rings truer than ever.37 Although multiple parties may be held liable in a single malpractice case, and liability may be apportioned among them or be subject to claims for contribution under joint and several liability, the human actor nonetheless risks being assigned a share that is disproportionate to their actual involvement in the decision-making.44 Their liability may thus, at least partially, shield several other parties, including the designers and manufacturers of the AI.45 In healthcare, the physician may also take part of the blame for issues that should actually be attributed to the hospital, for example, when the hospital itself designs the workflow.46

To ensure that the benefits of human oversight are achieved and that physicians do not become “liability sponges”, it is crucial to implement measures to achieve effective human supervision. This can include, e.g., specific training for healthcare professionals.47, 48 Although explainability is often presented as a potential solution to automation bias, it has been shown that physicians may not always be able to use explanations effectively.49 Consequently, their decisions may still be biased, even when they are presented with explanations. Allocating adequate time to critically evaluate a system’s outputs can help mitigate the risk of automation bias.48

Conclusions

In certain contexts, CDSSs may function as collaborative decision-makers, which highlights the need for physician oversight calibrated to the specific system and clinical environment. This oversight should be supported by appropriate measures to ensure that the desired level of safety is achieved. It is equally important to avoid scenarios in which physicians, while formally assigned a supervisory role, are not given the conditions necessary to exercise control effectively and concretely, and thus potentially face an undue liability burden.44 While human oversight should be welcomed for preserving the physician–patient relationship and contributing to better overall health outcomes, it is also essential to ensure that its concrete implementation is consistent with its underlying principles and that AI producers and hospitals do not take undue advantage of it.

A key issue is thus how to precisely define the allocation of liability among all involved parties: it is currently unclear which parameters are relevant to this evaluation and how they should be assessed and weighed. The uncertainty surrounding AI liability regimes and their concrete applicability further complicates the matter and calls for dedicated research. Moreover, it remains to be determined how both AI systems and clinical workflows can be designed so that physicians can properly perform their oversight functions.

Forensic medicine has already established itself as a crucial discipline in the ascertainment and evaluation of medical malpractice cases, delineating the individual liabilities of healthcare professionals. It remains to be seen whether forensic medicine, beyond defining standards of care in collaboration with clinical disciplines, will also be called upon to assess liability when AI systems err or provide incorrect guidance to healthcare professionals. The stakes are significant, and the ex ante and ex post guarantor role that forensic medicine can play in this context is highly relevant.